These are the main SPQR development tasks.

Define an ontology

Ongoing

This is a potentially huge task but will be tackled in an iterative fashion. We will focus on a local “spqr” ontology and find taxonomies of classes and properties that suit our datasets best. When we have our ontology ready (encoded in an OWL file) we can start mapping it to other ontologies and vocabularies (see the Ontology Mapping task below).

For example we can define:

spqr:ancientFindSpot (spqr:Object -> spqr:Place)

spqr:modernFindSpot (spqr:Object -> spqr:Place)

and then assert that:

spqr:ancientFindSpot rdfs:subPropertyOf http://open.vocab.org/terms/findspot

spqr:modernFindSpot rdfs:subPropertyOf http://open.vocab.org/terms/findspot

if we decide to support ov:findspot. If we decide to use Europeana we can assert:

spqr:Place owl:equivalentClass ens:Place

or:

spqr:Date owl:equivalentClass ens:TimeSpan

Mappings to “other” ontologies should be explicitly recorded in the OWL file.
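To illustrate what the rdfs:subPropertyOf assertions above buy us, here is a minimal Python sketch of the entailment a reasoner would perform. Triples are plain tuples, the object and place identifiers are made up for illustration, and a real setup would rely on the triple store or an OWL reasoner rather than hand-written code.

```python
# Triples are plain (subject, predicate, object) tuples of strings.
SUB_PROPERTY_OF = "rdfs:subPropertyOf"

# Mapping assertions taken from the example above.
mappings = [
    ("spqr:ancientFindSpot", SUB_PROPERTY_OF, "ov:findspot"),
    ("spqr:modernFindSpot", SUB_PROPERTY_OF, "ov:findspot"),
]

# Hypothetical instance data; the identifiers are made up for illustration.
data = [
    ("spqr:object/1", "spqr:ancientFindSpot", "spqr:place/a"),
    ("spqr:object/2", "spqr:modernFindSpot", "spqr:place/b"),
]


def super_properties(prop, mappings):
    """Return every property reachable from prop via rdfs:subPropertyOf."""
    found, todo = set(), [prop]
    while todo:
        current = todo.pop()
        for s, p, o in mappings:
            if s == current and p == SUB_PROPERTY_OF and o not in found:
                found.add(o)
                todo.append(o)
    return found


def infer(data, mappings):
    """Add (s, super, o) for each (s, p, o) where p is a sub-property of super."""
    inferred = set(data)
    for s, p, o in data:
        for sup in super_properties(p, mappings):
            inferred.add((s, sup, o))
    return inferred


expanded = infer(data, mappings)
```

A client that only knows ov:findspot can then query the expanded set and still find both objects, without knowing anything about the spqr properties.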

At first we will extract classes/individuals from instances – our goal is not to build complete ontologies of materials or object types. We may reuse existing ontologies (there may be something in Getty) when found, but this will be done in later stages. Sometimes OWL will not be enough and mapping will require some graph processing; there are several approaches, such as rule extensions to OWL.

Analysing instances will require a few analysis scripts that work on the output of the harvesters and therefore overlap with the next stage.

The plan is to:

- Integrate “locally” early.

- Expand integration context to “linked data cloud” lazily.

Write harvesting/RDFizing scripts

January-February 2011

As part of the tool evaluation we have set up a 3store populated with epigraphic data, but this data is not linked together – it is just discrete sets of data within the same 3store. This task looks at linking the data sets by analysing them in more detail.

Once the initial ontology is set, we will write scripts to harvest and convert documents to RDF. We will not think about URI schemes at this point, but just use an “spqr” namespace unless the URI is obvious (e.g. when harvesting dereferences URIs to get an XML representation). The scripts will attempt to automatically map places to Pleiades, parse dates, and parse whatever else is parseable.
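As a rough idea of what such a conversion step might look like, the Python sketch below turns one harvested record into N-Triples. Everything specific in it is an assumption made for illustration: the namespace URI, the record fields, the notBefore/notAfter property names, the date formats handled, and the gazetteer entry (a placeholder, not a real Pleiades identifier).

```python
import re

SPQR = "http://example.org/spqr/"  # placeholder namespace, not a project decision

# Hypothetical gazetteer: lower-cased place name -> Pleiades URI.
# The entry is a placeholder; a real script would build this from Pleiades data.
PLEIADES = {"aphrodisias": "http://pleiades.stoa.org/places/PLACEHOLDER"}


def parse_year_range(text):
    """Parse strings such as 'AD 100-150' or '50 BC' into (start, end) years.

    BC years are returned as negative numbers; None means 'not parseable'.
    """
    match = re.fullmatch(r"\s*(AD\s+)?(\d+)(?:\s*-\s*(\d+))?(\s+BC)?\s*", text)
    if not match:
        return None
    start = int(match.group(2))
    end = int(match.group(3)) if match.group(3) else start
    if match.group(4):  # a trailing 'BC' flips both years to negative
        start, end = -start, -end
    return (start, end)


def rdfize(record):
    """Turn one harvested record (a dict) into a list of N-Triples lines."""
    subject = f"<{SPQR}object/{record['id']}>"
    lines = []
    place = PLEIADES.get(record.get("place", "").strip().lower())
    if place:
        lines.append(f"{subject} <{SPQR}ancientFindSpot> <{place}> .")
    years = parse_year_range(record.get("date", ""))
    if years:
        # notBefore / notAfter are hypothetical property names.
        lines.append(f'{subject} <{SPQR}notBefore> "{years[0]}" .')
        lines.append(f'{subject} <{SPQR}notAfter> "{years[1]}" .')
    return lines
```

Fields that cannot be matched or parsed are simply skipped here; a real script would probably log them so they can be reviewed by hand.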

There are three types of script:

- Utility scripts.

- Scripts to probe data sets to see their classes, which will then be manually augmented with text. This will identify problems, e.g. there are already myriad classes for graffiti, insults, curses, epitaphs etc. We will run statistics to see the frequency of classes, and ignore (but note) classes that are rarely used.

- Scripts to RDFize data.

The scripts will be used to explore and convert the data.
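The class-frequency probe described above could be sketched as follows in Python. The sample triples, the class names and the threshold are all made up for illustration; the real input would be the output of the harvesters.

```python
from collections import Counter

RDF_TYPE = "rdf:type"


def class_frequencies(triples):
    """Count how often each class appears as the object of rdf:type."""
    return Counter(o for _, p, o in triples if p == RDF_TYPE)


def split_by_usage(frequencies, min_count):
    """Separate classes used at least min_count times from rarely used ones.

    Rare classes are returned rather than discarded, so they can be noted.
    """
    kept = {c: n for c, n in frequencies.items() if n >= min_count}
    rare = {c: n for c, n in frequencies.items() if n < min_count}
    return kept, rare


# Hypothetical sample data.
triples = [
    ("doc:1", RDF_TYPE, "spqr:Graffito"),
    ("doc:2", RDF_TYPE, "spqr:Graffito"),
    ("doc:3", RDF_TYPE, "spqr:Graffito"),
    ("doc:4", RDF_TYPE, "spqr:Epitaph"),
    ("doc:5", RDF_TYPE, "spqr:Epitaph"),
    ("doc:6", RDF_TYPE, "spqr:Curse"),
    ("doc:6", "spqr:ancientFindSpot", "place:a"),
]

kept, rare = split_by_usage(class_frequencies(triples), min_count=2)
```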

We will initially focus on 3 of the following data sets, with the option to return to more later if time permits:

- iAph + iTrip (EpiDoc)

- HGV (via papyri.info)

- APIS (via papyri.info)

- Volterra

- Nomisma

- Petra + Khirbat al-Mudayna al-Aliya (OpenContext)

- Smallfinds (Portable Antiquities Scheme)

- Ure

- Illion

At this point we should have an integrated dataset inside our triple store. We will be able to browse this and run queries. Reasoning capabilities of the triple store are not important at this point.

The analysis, harvesting and conversion scripts will be written in a scripting language running on a JVM – Java APIs are very popular in the Semantic Web world. We will use Clojure. Some functions should be reusable (like looking up matches from Pleiades).

Decide on and implement a dereferenceable URI scheme

March 2011

This is probably more important than using existing vocabularies. All non-literal elements in our RDF graph should have dereferenceable URIs. We will create schemes for datasets we control. Doing this for “third-party” datasets may require some thought. We will most probably set up a PURL server and either provide URIs ourselves or reuse existing “derived” URIs, for example from papyri.info.

We will then update the RDFizing scripts accordingly and repopulate the triple store. In cases where we provide a dereferenceable URI, we should have a system in place that will query the 3store to answer HTTP requests. For example, when a user asks for an RDF/XML representation of the non-information resource http://purl.spqr/object/09865, the server should query our 3store and return a response.
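A minimal Python sketch of that request handling is shown below. It is not tied to any web framework or to the 3store client API: run_query is a hypothetical caller-supplied function that takes a SPARQL query string and returns RDF/XML text (or nothing), and the path pattern and status codes are assumptions made for illustration.

```python
import re

BASE = "http://purl.spqr"  # host taken from the example URI above

# Assumed shape of the paths we mint: /object/<digits>.
PATH_PATTERN = re.compile(r"/object/\d+")


def construct_query(uri):
    """Build a SPARQL CONSTRUCT query returning all triples about uri."""
    return f"CONSTRUCT {{ <{uri}> ?p ?o }} WHERE {{ <{uri}> ?p ?o }}"


def handle(path, accept, run_query):
    """Answer one HTTP request; returns (status, content_type, body).

    run_query is a hypothetical function: SPARQL string -> RDF/XML text.
    """
    if not PATH_PATTERN.fullmatch(path):
        return 404, "text/plain", "Unknown resource"
    if "application/rdf+xml" not in accept:
        return 406, "text/plain", "Only application/rdf+xml is supported in this sketch"
    uri = BASE + path
    body = run_query(construct_query(uri))
    if not body:
        return 404, "text/plain", f"No data for {uri}"
    return 200, "application/rdf+xml", body
```

Checking the path against a fixed pattern before building the query also keeps arbitrary request text out of the SPARQL string.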

The 3store will be hosted at KCL.

At this point we will have a 3store with linked data from a number of data sets and dereferenceable URIs.

Basic query portal

April-June 2011

We will develop a basic query portal supporting:

- Simple navigation – text based + a simple ‘bread crumb’ (maybe based on some tree-like HTML widget).

- SPARQL endpoint + form based interface for SPARQL queries.

- Free text searches – this will require creating an index of all information resources referred to from our 3store – SOLR-based solution. See also, http://fgiasson.com/blog/index.php/2009/04/29/rdf-aggregates-and-full-text-search-on-steroids-with-solr/ (last accessed, 23/02/11) for possible ideas.

If time permits it will also support:

- Spatial searches – to support this efficiently we will need to have an external spatial index.

- Temporal searches – again, to support this efficiently we will need an external temporal index.
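To give a feel for what an external temporal index might do, here is a small Python sketch that answers "which objects overlap this date range?" over year ranges kept outside the triple store. The class name, the entry format and the sample identifiers are assumptions; a spatial index would follow the same pattern with a different data structure.

```python
import bisect


class TemporalIndex:
    """Objects with (start, end) year ranges, kept sorted by start year."""

    def __init__(self, entries):
        # entries: iterable of (start_year, end_year, uri) tuples
        self._entries = sorted(entries)
        self._starts = [entry[0] for entry in self._entries]

    def overlapping(self, start, end):
        """Return URIs whose range overlaps the query range [start, end]."""
        # Only entries starting at or before `end` can overlap the query.
        upper = bisect.bisect_right(self._starts, end)
        return [uri for s, e, uri in self._entries[:upper] if e >= start]


# Hypothetical sample data; BC years are negative.
index = TemporalIndex([
    (100, 150, "object/a"),
    (200, 250, "object/b"),
    (-50, 120, "object/c"),
])
```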

One issue in relation to free-text searches is when to index the documents, before or after injection into the 3store. We want to index the epigraphic documents but not documents from related datasets e.g. DBPedia or Pleiades.
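Whichever point we choose, the indexer needs to filter by source. The Python sketch below uses a plain in-memory inverted index as a stand-in for SOLR and skips documents whose URIs fall under the related datasets. The excluded prefixes and the sample documents are assumptions made for illustration.

```python
import re
from collections import defaultdict

# Assumed namespace prefixes of related datasets that should not be indexed.
EXCLUDED_PREFIXES = ("http://dbpedia.org/", "http://pleiades.stoa.org/")


def build_index(documents):
    """Map each word to the set of document URIs that contain it.

    documents is an iterable of (uri, text) pairs.
    """
    index = defaultdict(set)
    for uri, text in documents:
        if uri.startswith(EXCLUDED_PREFIXES):
            continue  # related dataset, not an epigraphic document
        for word in re.findall(r"\w+", text.lower()):
            index[word].add(uri)
    return index


def search(index, query):
    """Return the URIs of documents containing every word in the query."""
    words = re.findall(r"\w+", query.lower())
    if not words:
        return set()
    return set.intersection(*(index.get(word, set()) for word in words))


# Hypothetical sample documents.
documents = [
    ("http://example.org/spqr/doc/1", "Epitaph for a gladiator"),
    ("http://example.org/spqr/doc/2", "Curse against a rival"),
    ("http://dbpedia.org/resource/Gladiator", "A gladiator was an armed combatant"),
]
text_index = build_index(documents)
```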

The proposed architecture and components can be seen at Architecture.

Usability evaluation

May 2011 onwards.

End users evaluate the system as it evolves, and their comments contribute to its further development.

Ontology mapping

June 2011

We will refine our “local” ontology devised earlier and then analyse how it can be mapped to the Europeana Data Model (EDM). This will enhance the linkability of the data to related data sets. We will feed back to the EDM authors any problems we encounter and suggestions we have. We may also look at other ontologies, e.g. CIDOC CRM.

Final 3store rebuild

July 2011

Using our extended ontology, we will reharvest the datasets in the 3store and repopulate the 3store. If time permits we’ll also RDFize more datasets and possibly look at web scraping of datasets available online via web forms.

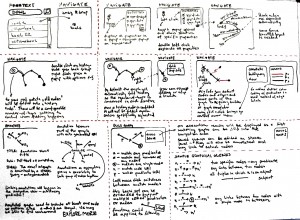

Graphical query builder / annotator

A novel graphical query builder / annotator has been proposed. There is no time to explore this within the scope of the project but the proposal itself is a project output and represents an option for future work.

It introduces a sensible way of annotating knowledge…

There is an issue as to representing annotations as SPARQL and how to query a graph with such annotations:

- Auto-expand any SPARQL annotations and add the results to the graph. If the original graph changes then the annotation-SPARQL needs to be rerun and the graph updated.

- User runs SPARQL query over graph with annotations but annotation-SPARQL is left un-run, or is then browsed by user (run only when user browses).

- User browses, which runs the query and user can then run SPARQL across original graph + the expanded graph with the results of the annotation-SPARQL.

- Running SPARQL on the original graph auto-runs the annotation-SPARQL as a side-effect.

Options for the UI are jQuery, Flash/Flex, Silverlight or JavaFX.

Out of scope

The following are also out of scope. These will form the basis for future work:

- Faceted browsing.

- Ontology and instance mapping. Not an issue now, as we define the ontology ourselves, but it will be in general.

- Data sets that are RDF but not LD (no dereferenceable URIs).

- Inconsistent data that needs a bit of cleaning.

- URI stability is a social issue. The community/providers must agree on who provides them and how they are defined. SPQR is focusing on technology.

- Levels of services and data integration e.g. I get your endpoint OR I get your raw data.

- Links to non-open data.

- Versioning. A link valid between two sets of data may not be valid in future versions. Or a link may be assumed to be valid but may in fact not be, in which case it will be removed. Triple stores are starting to support versioning, e.g. rollback or creating a view of the data at a specific date. This is recognised as very important for scientific research.

- Automatic derivation of specific relations e.g. nearness, temporal relatedness etc.

- Automatic extraction and proposal of ontologies from raw data.